Most of your A/B tests will fail

by Max Al Farakh · Jun 19 2013

A/B testing is overhyped. It is everywhere these days, and many people in our industry seem to see it as a magic tool that will boost their conversions with very little effort. It’s easy to get caught up in the A/B testing frenzy: the Internet is filled with success stories about how changing a stupid button color resulted in a 300% increase in conversions.

A/B testing is a very powerful conversion optimization tool, but it requires a lot of hard work, and often it is just useless. Many of the 300%-boost success stories fall into one of two groups:

- Going from absolute total crap to something decent increases your conversions. You don’t need an A/B test for that. Reading a book about basic design principles or about how the human brain works will serve you much better.

- A 100% increase could mean going from 0.5% to 1%. That is still a very good result in terms of conversions, but it is way less mind-blowing when you look at it this way.
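The arithmetic behind the second point fits in a few lines of Python. The helper names below are made up for illustration; the point is simply that the same pair of conversion rates gives a big relative lift but a small absolute one:

```python
def relative_lift(baseline_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the baseline, in percent."""
    return (variant_rate - baseline_rate) / baseline_rate * 100


def absolute_lift(baseline_rate: float, variant_rate: float) -> float:
    """Absolute change in conversion rate, in percentage points."""
    return (variant_rate - baseline_rate) * 100


# The example from the text: going from 0.5% to 1% conversion.
baseline, variant = 0.005, 0.01
print(f"relative lift: {relative_lift(baseline, variant):.0f}%")  # relative lift: 100%
print(f"absolute lift: {absolute_lift(baseline, variant):.1f} percentage points")  # absolute lift: 0.5 percentage points
```

A headline that reports only the relative figure sounds far more impressive than "half a percentage point", even though both describe the same result.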

No company has ever increased its revenue by changing an insignificant detail like a button color. None. Zero. Get over it.

Most of the tests produce no results

A successful split test is a rare thing. Most of the time, both alternatives will convert at almost the same rate, especially if you test insignificant changes.

In our experience, only one out of ten A/B tests produces a result. For AppSumo it’s one out of eight. These numbers seem to be consistent across the industry.

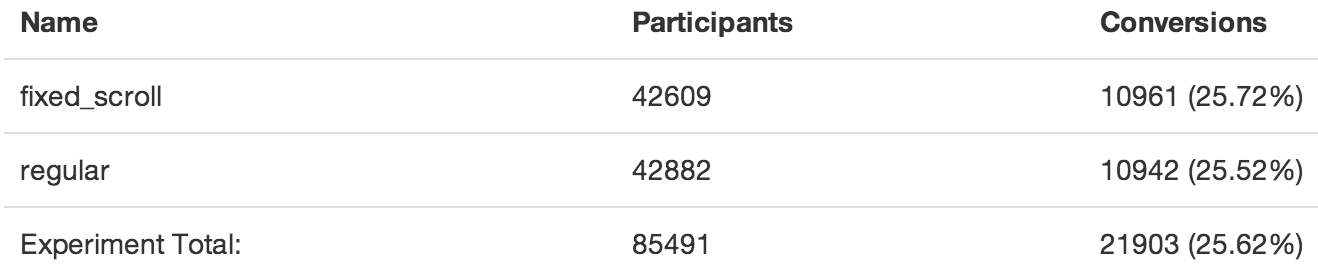

Here is an example of a test we ran not so long ago. Our landing pages are usually quite long, so we made the call-to-action buttons fixed-position, so that they stay visible as the visitor scrolls down the page. You can see it in action on the hosted help desk landing page. We were absolutely sure it would convert better.

As you can see, the test had 85k participants, and both alternatives got exactly the same number of conversions. Insane.

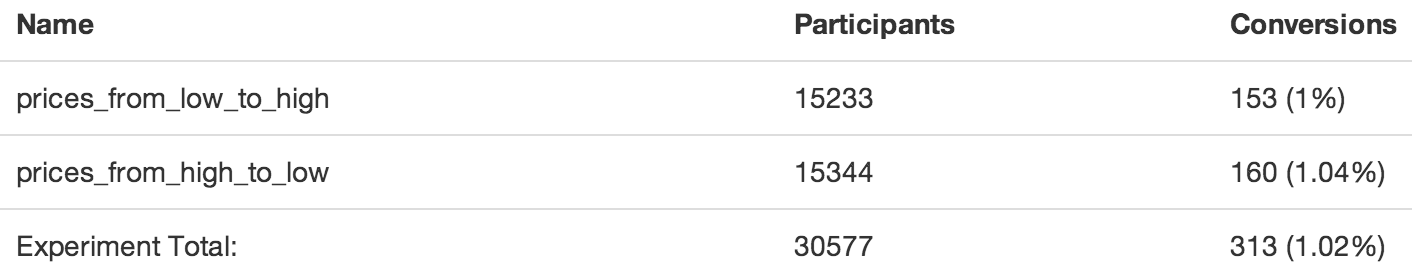

Another example: we tested sorting the plans in our pricing table from the most expensive to the cheapest versus the other way around, and tracked orders of the most expensive plan.

Again, 30k participants and zero difference.

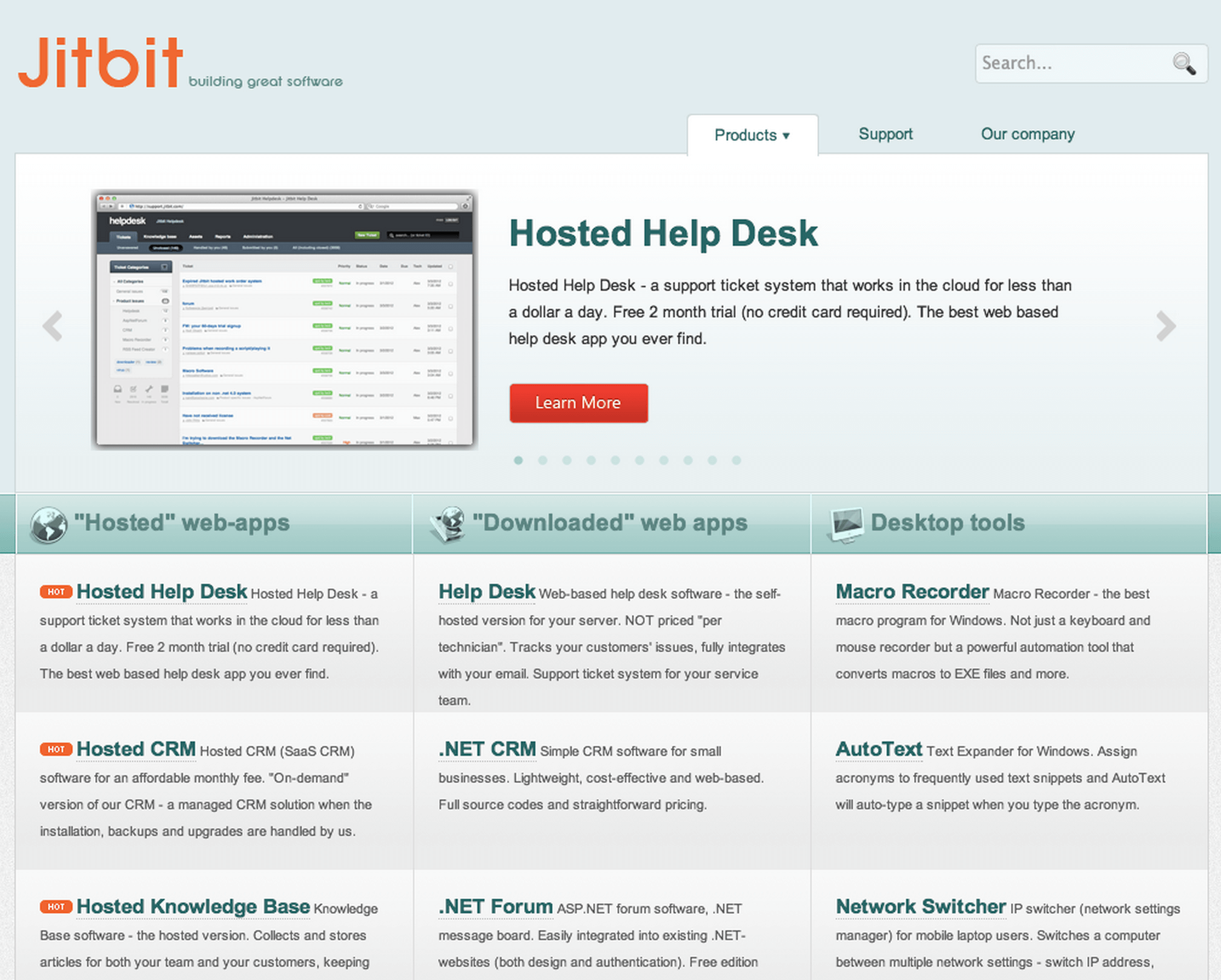

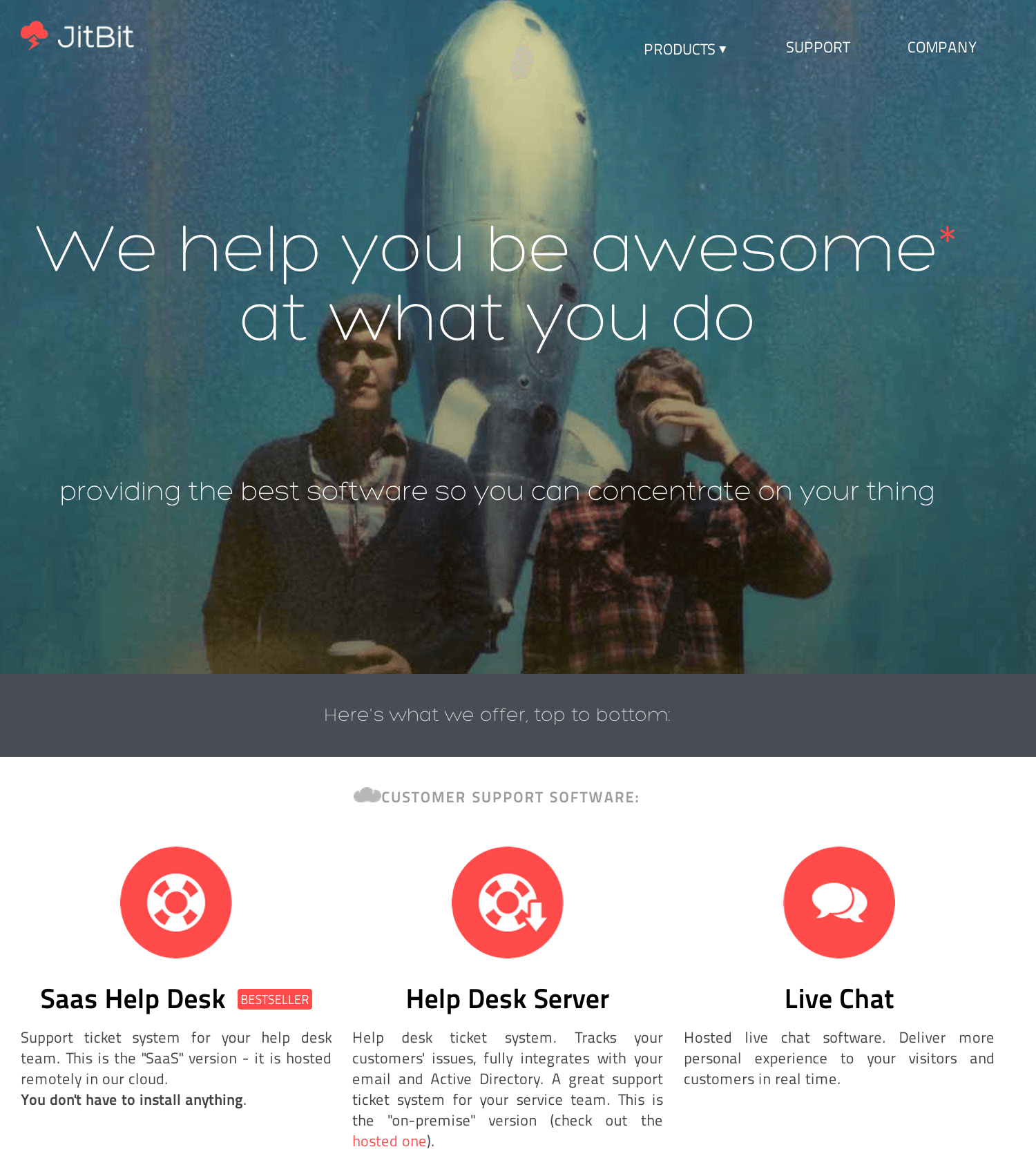

We even radically redesigned our entire web-site from this:

to this:

Did our conversions change? Hell no, nothing changed. Still have high hopes for that call-to-action button color?

Statistical significance

Amazon pioneered A/B testing in e-commerce. It has millions of daily visitors, and at that scale even a small change can lead to a big increase. However, most of us cannot boast such figures.

An A/B test result has to be statistically significant to be trusted; otherwise it’s of no use. Significance depends on the number of visitors who participated in the test, as well as on how big the difference between the alternatives is. If you run your test on 20 visitors and one alternative gets 2 conversions while the other gets 4, that doesn’t mean the second alternative is way better: with so few visitors, a gap like that can easily be down to chance.
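One way to check the 20-visitor example is Fisher’s exact test, which handles very small samples better than the usual normal-approximation z-test. The sketch below is a minimal, standard-library-only implementation of the two-sided version. It assumes the 20 visitors were split evenly, 10 per alternative, which the example does not actually state; the function name is illustrative.

```python
from math import comb


def fisher_exact_two_sided(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided Fisher's exact test p-value for a 2x2 conversion table.

    Conditions on the total number of conversions and sums the probabilities
    of every table that is at most as likely as the observed one.
    """
    total_conv = conv_a + conv_b
    denominator = comb(n_a + n_b, total_conv)

    def weight(k: int) -> int:
        # Ways to see k conversions in A and the rest in B; proportional to
        # the hypergeometric probability of that table.
        return comb(n_a, k) * comb(n_b, total_conv - k)

    observed = weight(conv_a)
    low = max(0, total_conv - n_b)
    high = min(n_a, total_conv)
    # All weights share one denominator, so integer comparison is exact.
    extreme = sum(w for w in (weight(k) for k in range(low, high + 1)) if w <= observed)
    return extreme / denominator


p = fisher_exact_two_sided(conv_a=2, n_a=10, conv_b=4, n_b=10)
print(f"p-value: {p:.2f}")  # p-value: 0.63
```

A p-value of about 0.63 is nowhere near the conventional 0.05 threshold: if both alternatives converted equally well, a split at least this lopsided would still turn up in roughly 63% of cases.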

So if your website doesn’t get many visitors, or you don’t run your tests long enough, A/B testing will be useless. Too many people stop their tests too early and draw false conclusions.
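How many visitors is "many"? A common back-of-the-envelope answer is the standard sample-size formula for comparing two proportions. The sketch below assumes a two-sided test at a 5% significance level with 80% power (conventional defaults, not figures from this post) and reuses the 0.5% to 1% example from earlier. The daily traffic number is hypothetical and only turns the sample size into a rough duration.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(p_baseline: float, p_variant: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant to detect p_baseline -> p_variant.

    Normal-approximation formula for a two-sided, two-proportion test:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    variance = p_baseline * (1 - p_baseline) + p_variant * (1 - p_variant)
    effect = p_variant - p_baseline
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


n = visitors_per_variant(0.005, 0.01)
print(f"about {n} visitors per variant")

# Hypothetical traffic figure, used only to estimate how long the test runs.
daily_visitors = 500
days = ceil(2 * n / daily_visitors)
print(f"about {days} days at {daily_visitors} visitors/day split across two variants")
```

For this example the formula asks for roughly 4,700 visitors per variant. Because the required sample grows with the inverse square of the difference you want to detect, halving that difference roughly quadruples the traffic you need, which is why tests on low-traffic pages rarely reach significance.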

Takeaways

- Most of your tests will fail. It’s sad, but it’s okay.

- Testing insignificant changes is a waste of time.

- Don’t run tests on pages that have a small number of visitors.

- Don’t finish your tests before you have a statistically significant result. Use this handy calculator.

- Don’t ever rely on intuition. Test results are unpredictable.
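Stopping a test the moment it looks significant is one of the most common ways to finish too early. The simulation below is a hypothetical illustration, not data from our tests. It runs A/A tests, where both alternatives share the same true conversion rate, so every "significant" result is a false positive. It then compares checking after every batch of visitors with checking once at the end. It uses a two-proportion z-test at the 5% level; the conversion rate, batch size, and number of checks are arbitrary assumptions.

```python
import random
from math import erfc, sqrt


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided two-proportion z-test p-value, with n visitors in each arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0
    z = abs(conv_a - conv_b) / n / se
    return erfc(z / sqrt(2))


def simulate(experiments: int = 500, checks: int = 20, batch: int = 100,
             rate: float = 0.10, alpha: float = 0.05, seed: int = 1):
    """Return (false-positive rate when peeking, rate when checking once at the end)."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        flagged = False
        for _ in range(checks):
            # Both arms share the same true rate, so any "winner" is pure noise.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if p_value(conv_a, conv_b, n) < alpha:
                flagged = True  # a peeker would stop here and declare a winner
        peeking_hits += flagged
        final_hits += (p_value(conv_a, conv_b, n) < alpha)
    return peeking_hits / experiments, final_hits / experiments


peeking_rate, final_rate = simulate()
print(f"false positives when peeking after every batch: {peeking_rate:.1%}")
print(f"false positives when checking once at the end:  {final_rate:.1%}")
```

In runs like this, the peeking rate typically comes out several times higher than the roughly 5% you get from a single check at the end. Deciding on the sample size before the test starts, and only then looking at the result, avoids that trap.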

Getting the most out of your tests

At this point, you are probably thinking about giving up on A/B testing (or never starting). We thought the same. Yes, it’s not as great or as easy as we hoped it would be, but it’s too powerful a tool to give up. This is what we do to get the most out of our tests:

- Always ask yourself, “Why do I think this alternative will perform better?” It will save you from lots of useless tests.

- Always test one thing on a page at a time.

- Tests may take weeks to complete. Don’t rush them; be patient.

- Testing should be continuous. Build an A/B testing strategy.

And if you still don’t have ideas for things to test, this post has 71 of them.